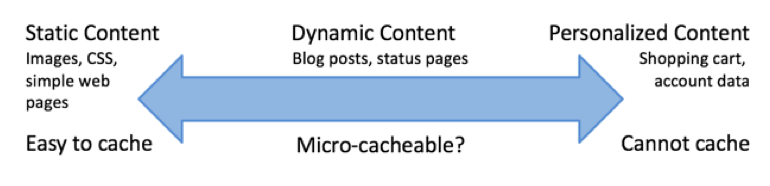

The benefits of caching are two‑fold: caching improves web performance by delivering content more quickly, and it reduces the load on the origin servers. The effectiveness of caching depends on the cacheability of the content. For how long can we store the content, how do we check for updates, and how many users can we send the same cached content to?

Caching static content, such as images, JavaScript and CSS files, and web content that rarely changes is a relatively straightforward process. Cache updates can be handled by regular timeouts, conditional GETs, and, if necessary, cache‑busting techniques that change the URL of the referenced object.
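As a rough illustration of cache busting, the helper below appends a short content fingerprint to an asset URL. Whenever the file changes, its URL changes too, so caches fetch it as a new object. This is a minimal sketch: the function name, the `v` query parameter, and the 8‑character SHA‑256 prefix are hypothetical choices, not something the text prescribes.

```python
import hashlib


def cache_busted_url(path: str, content: bytes, length: int = 8) -> str:
    """Return `path` with a short content fingerprint appended as a query string.

    Hypothetical helper: the `v` parameter name and the SHA-256 prefix length
    are illustrative choices only.
    """
    fingerprint = hashlib.sha256(content).hexdigest()[:length]
    return f"{path}?v={fingerprint}"


old = cache_busted_url("/static/site.css", b"body { color: black; }")
new = cache_busted_url("/static/site.css", b"body { color: navy; }")
print(old)
print(new)  # a different URL, so caches treat the edited file as a new object
```

Because the fingerprint is derived from the content, unchanged files keep the same URL and stay cached indefinitely.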

Microcaching of Dynamic Content

Microcaching is a caching technique whereby content is cached for a very short period of time, perhaps as little as 1 second. This effectively means that updates to the site are delayed by no more than a second, which in many cases is perfectly acceptable.
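To make the idea concrete, here is a minimal in‑process sketch of a 1‑second microcache in Python. It only illustrates the expiry logic; NGINX implements caching at the proxy layer. The class name, the `get` method, and the injectable clock are hypothetical choices made for this example.

```python
import time
from typing import Callable, Dict, Tuple


class MicroCache:
    """Tiny in-process cache whose entries expire after `ttl` seconds (e.g. 1 s)."""

    def __init__(self, ttl: float = 1.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so the behaviour can be tested deterministically
        self.entries: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)
        self.origin_calls = 0

    def get(self, key: str, fetch: Callable[[str], str]) -> str:
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh: serve from the cache
        self.origin_calls += 1  # missing or expired: ask the origin again
        value = fetch(key)
        self.entries[key] = (now + self.ttl, value)
        return value


# Simulated requests for "/" at several points in time, using a fake clock.
fake_now = [0.0]
cache = MicroCache(ttl=1.0, clock=lambda: fake_now[0])
for t in (0.0, 0.2, 0.9, 1.0, 1.5):
    fake_now[0] = t
    cache.get("/", lambda key: f"page for {key}")
print(cache.origin_calls)  # 2: the origin is hit at t=0.0 and again at t=1.0
```

Content served at t=1.5 is half a second old. That staleness bound is exactly the trade‑off microcaching accepts.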

Simple Microcaching with NGINX

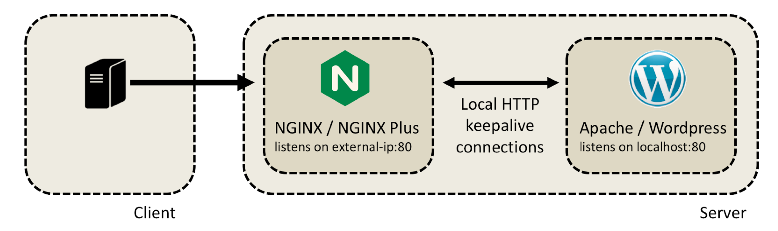

Accelerating this WordPress service (running on Apache) with NGINX is a two‑step process.

Step 1: Proxy Through NGINX

Install NGINX or NGINX Plus on the WordPress server and configure it to receive incoming traffic and forward it internally to the WordPress server.

The NGINX proxy configuration is relatively straightforward:

server {
    listen external-ip:80;  # External IP address
    ...
}

We also modify the Apache configuration (listen ports and virtual servers) so that Apache is bound to localhost:80 only.

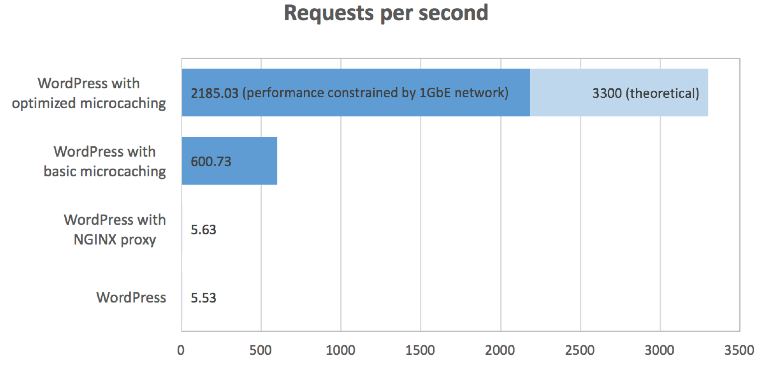

You might think that adding an extra proxying hop would have a negative effect on performance, but in fact the performance change is negligible:

root@nginx-client:~# ab -c 10 -t 30 -k http://nginx-server/
Requests per second: 5.63 [#/sec] (mean)
Time per request: 1774.708 [ms] (mean)
Time per request: 177.471 [ms] (mean, across all concurrent requests)
Transfer rate: 324.44 [Kbytes/sec] received

On a busier server (handling more concurrent requests), the keepalive optimizations delivered by NGINX alone will bring a significant performance boost.

Step 2: Enable Short‑Term Caching

With the addition of just two directives to our server configuration, NGINX or NGINX Plus caches all cacheable responses. Responses with a 200 OK status code are cached for just 1 second.

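proxy_cache_path /tmp/cache keys_zone=cache:10m levels=1:2 inactive=600s max_size=100m;

server {
    proxy_cache cache;         # Use the "cache" zone defined above
    proxy_cache_valid 200 1s;  # Cache 200 OK responses for 1 second
    ...
}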
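The `proxy_cache_path` line does more than name a directory. `keys_zone=cache:10m` creates a 10‑MB shared memory zone called `cache` that holds the cache keys. `inactive=600s` evicts entries that have not been accessed for 10 minutes, and `max_size=100m` caps the on‑disk size. NGINX names each cache file after the MD5 hash of its cache key, and `levels=1:2` spreads those files over two directory levels.

The Python sketch below reproduces that path layout for illustration. The function names and the example key `httplocalhost/` are hypothetical; the real key depends on `proxy_cache_key` and on your `proxy_pass` target.

```python
import hashlib


def cache_file_for_digest(cache_root: str, digest: str) -> str:
    """Map an MD5 hex digest to its file path under a levels=1:2 cache directory.

    Level 1 is the last hex character of the digest; level 2 is the two
    characters before it.
    """
    return f"{cache_root}/{digest[-1]}/{digest[-3:-1]}/{digest}"


def cache_file_for_key(cache_root: str, cache_key: str) -> str:
    """NGINX names cache files after the MD5 hash of the cache key."""
    digest = hashlib.md5(cache_key.encode("utf-8")).hexdigest()
    return cache_file_for_digest(cache_root, digest)


# Example digest from the NGINX proxy_cache_path documentation.
print(cache_file_for_digest("/tmp/cache", "b7f54b2df7773722d382f4809d65029c"))
# -> /tmp/cache/c/29/b7f54b2df7773722d382f4809d65029c

# Hypothetical cache key, for illustration only.
print(cache_file_for_key("/tmp/cache", "httplocalhost/"))
```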

When we rerun the benchmark test, we see a significant performance boost:

root@nginx-client:~# ab -c 10 -t 30 -k http://nginx-server/
Complete requests: 18022
Requests per second: 600.73 [#/sec] (mean)
Time per request: 16.646 [ms] (mean)
Time per request: 1.665 [ms] (mean, across all concurrent requests)
Transfer rate: 33374.96 [Kbytes/sec] received

That’s more than a 100‑fold performance improvement, from 5.63 requests per second to 600.73. This sounds great, but there’s a problem.
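The speedup can be checked directly against the two `ab` runs, and the reported figures can be cross‑checked against each other. `ab` computes the mean "Time per request" as concurrency × elapsed time / completed requests, so it should be close to concurrency × 1000 / requests‑per‑second. The sketch below uses only numbers copied from the output above.

```python
# Figures copied from the two ab runs above (10 concurrent clients).
concurrency = 10
before = {"rps": 5.63, "ms_per_request": 1774.708}
after = {"rps": 600.73, "ms_per_request": 16.646}

# Mean time per request (ms) is roughly concurrency * 1000 / requests-per-second,
# so requests-per-second can be re-derived from it as a consistency check.
for run in (before, after):
    derived_rps = concurrency * 1000 / run["ms_per_request"]
    print(f"reported {run['rps']:.2f} req/s, derived {derived_rps:.2f} req/s")

speedup = after["rps"] / before["rps"]
print(f"speedup: {speedup:.1f}x")  # about 106.7x
```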

The caching is working perfectly, and we can verify that content is refreshed every second (so it’s never more than a second out of date), but something unexpected is going on. You’ll observe that there’s a large standard deviation (141.5 ms) in the processing time. CPU utilization is still 100% (as measured by vmstat), and top shows 10 active httpd processes.

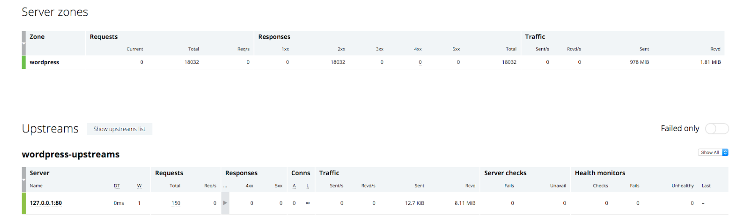

We can get a further hint by comparing the NGINX Plus live activity monitoring dashboard before and after the test.

The dashboard reports that NGINX handled 18032 requests during the test: the 18022 reported by ab, plus 10 requests that were outstanding when the benchmark ended at the 30‑second mark. However, NGINX forwarded 150 requests to the upstream, which is far more than the roughly 30 we would expect if we’re caching the content for 1 second during a 30‑second test.

What’s going on? Why the high CPU utilization and the larger‑than‑expected number of cache refreshes?

It’s because each time a cached entry expires, NGINX stops using it. NGINX forwards all requests to the upstream WordPress server until it receives a response and can repopulate the cache with fresh content.

This causes frequent spikes of up to 10 concurrent requests (one per benchmark client) to the WordPress server. These requests consume CPU and have a much higher latency than requests served from the cache, which explains the high standard deviation in the results.

Optimized Microcaching with NGINX

The strategy we want is clear: we want to forward as few requests to the upstream origin server as are required to keep the cache up to date. While the cached content is being updated, we’re happy to serve stale responses (1 or 2 seconds old) from the cache. To achieve this, we add two directives:

proxy_cache_lock – Restricts the number of concurrent attempts to populate the cache, so that while a cached entry is being created, further requests for that resource are queued up in NGINX

proxy_cache_use_stale – Configures NGINX to serve stale (currently cached) content while a cached entry is being updated
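The difference between the two behaviors can be seen in a toy tick‑by‑tick model. One mode forwards every request that finds the entry expired. The other lets a single request refresh the entry while the rest get the stale copy. This is a simplified sketch under stated assumptions, not a model of NGINX internals:

- 10 clients that each send a new request as soon as the previous one completes
- a 1‑second cache lifetime
- a fixed 0.661‑second origin fetch
- a hypothetical 5‑ms cache hit

It illustrates the mechanism only and does not reproduce the 150 and 16 upstream requests measured in this article.

```python
def count_upstream_fetches(duration_ms: int, ttl_ms: int, fetch_ms: int,
                           hit_ms: int, clients: int, lock_and_stale: bool) -> int:
    """Toy model of a microcache in front of a slow origin; returns origin fetches.

    lock_and_stale=False: every request that finds the entry expired goes upstream.
    lock_and_stale=True: only one request refreshes the entry; the others get the
    stale copy, or wait for the first fetch if nothing has been cached yet.
    """
    busy_until = [0] * clients  # tick (ms) at which each client sends its next request
    fresh_until = None          # expiry tick of the cached entry (None = empty cache)
    in_flight = []              # completion ticks of upstream fetches
    fetches = 0
    for now in range(duration_ms):
        # Fetches that complete at this tick (re)populate the cache for ttl_ms.
        for done in [t for t in in_flight if t <= now]:
            in_flight.remove(done)
            fresh_until = max(fresh_until or 0, done + ttl_ms)
        for c in range(clients):
            if busy_until[c] > now:
                continue                               # still waiting for a response
            if fresh_until is not None and now < fresh_until:
                busy_until[c] = now + hit_ms           # fresh cache hit
            elif not lock_and_stale or not in_flight:
                fetches += 1                           # this request goes to the origin
                in_flight.append(now + fetch_ms)
                busy_until[c] = now + fetch_ms
            elif fresh_until is not None:
                busy_until[c] = now + hit_ms           # serve stale while updating
            else:
                busy_until[c] = min(in_flight)         # first population: wait on the lock
    return fetches


for mode in (False, True):
    n = count_upstream_fetches(30_000, 1_000, 661, 5, 10, lock_and_stale=mode)
    print(f"lock_and_stale={mode}: {n} upstream fetches")
```

In this model, every expiry in the first mode sends all 10 clients to the origin at once. With locking and stale serving, each expiry costs exactly one origin fetch.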

Together with the caching directives we added previously, this gives us the following server configuration:

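server {
    proxy_cache cache;               # Zone name must match keys_zone in proxy_cache_path
    proxy_cache_lock on;             # Only one request at a time populates a cache entry
    proxy_cache_valid 200 1s;        # Cache 200 OK responses for 1 second
    proxy_cache_use_stale updating;  # Serve the stale entry while it is being refreshed
    ...
}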

The change in benchmarking results is significant. The number of requests per second jumps from 600 to nearly 2200:

root@nginx-client:~# ab -c 10 -t 30 -n 100000 -k http://nginx-server/
Concurrency Level: 10
Time taken for tests: 30.001 seconds
Complete requests: 65553
Failed requests: 0
Keep-Alive requests: 0
Total transferred: 3728905623 bytes
HTML transferred: 3712974057 bytes
Requests per second: 2185.03 [#/sec] (mean)
Time per request: 4.577 [ms] (mean)
Time per request: 0.458 [ms] (mean, across all concurrent requests)
Transfer rate: 121379.72 [Kbytes/sec] received

CPU utilization is much lower (note the idle time in the id column under cpu):

root@nginx-server:/var/www/html# vmstat 3
procs -----------memory---------- ---swap-- -----io---- -system--- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo    in   cs us sy id wa st
 1  0      0 106512  53192 641116    0    0     0    37 11016 3727 19 45 36  0  0
 1  0      0 105832  53192 641808    0    0     0    68 17116 3521 13 56 31  0  0
 1  0      0 104624  53192 643132    0    0     0    64 14120 4487 15 51 33  0  0

The transfer rate (121379.72 Kbytes/sec, or about 121 MBps) equates to 0.97 Gbps, so the test is network bound. With an average CPU utilization of about 66% (the id column averages roughly 33% idle), the peak performance for this server would be approximately 2185/0.66 = 3300 requests/second.
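The arithmetic behind those two conclusions can be reproduced from the `ab` and `vmstat` output. The sketch treats a kilobyte as 1000 bytes, matching the text's 121‑MBps figure. Its extrapolation assumes throughput scales linearly with CPU until the CPU is saturated, which is a simplification.

```python
# Numbers taken from the ab and vmstat output above.
rps = 2185.03
transfer_kbytes_per_s = 121379.72
idle_samples = [36, 31, 33]  # the "id" column from the three vmstat samples

# Network: kilobytes/s -> bits/s -> Gbps (1 KB = 1000 bytes here).
gbps = transfer_kbytes_per_s * 1000 * 8 / 1e9

# CPU: utilization is 100% minus the mean idle percentage.
utilization = 1 - sum(idle_samples) / len(idle_samples) / 100

# Linear extrapolation to 100% CPU (an assumption, not a measurement).
peak_rps = rps / utilization

print(f"{gbps:.2f} Gbps, {utilization:.0%} CPU, ~{peak_rps:.0f} req/s at 100% CPU")
```

Using the exact mean idle time (33.3%) gives a slightly lower estimate than dividing by 0.66. Both land at roughly 3,300 requests per second.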

Also observe the consistent response times reported by ab (the standard deviation is only 8.1 ms), and the small number of requests (16) that the dashboard shows were forwarded to the upstream server during the 30‑second test.

Why just 16 requests? The cache entry stays fresh for 1 second, and from the ab results an update can take up to 0.661 seconds, during which stale content is served. If each update takes about that long, a full refresh cycle lasts roughly 1.66 seconds. Over a 30‑second period you’d then expect no more than about 18 (30/1.66) upstream requests, in line with the 16 observed.
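The estimate can be written out explicitly. This sketch assumes, as the paragraph does, that every refresh takes the full 0.661 seconds and starts as soon as the previous entry expires. Faster updates would shorten the cycle and allow more refreshes.

```python
import math

test_seconds = 30
ttl_seconds = 1.0            # proxy_cache_valid 200 1s
max_update_seconds = 0.661   # slowest update time quoted in the text (from ab)

# One refresh cycle: the entry is fresh for the TTL, then one request spends
# up to max_update_seconds refreshing it while other clients get stale content.
refresh_interval = ttl_seconds + max_update_seconds
estimated_refreshes = math.floor(test_seconds / refresh_interval)
print(f"cycle of {refresh_interval:.3f} s -> about {estimated_refreshes} refreshes")
```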